Big data has been on the business radar for a few years now, but in 2014 there has been a marked upswing in the use of technologies that leverage it across many industries. Companies across the board now have unprecedented amounts of both public and private data, including user profiles, consumer habits, business outputs, and proprietary algorithms.

However, access to data alone – even large amounts of it – doesn’t necessarily add value to a company. In order for big data to be useful, it needs to be smartly processed, rather than merely farmed.

In the case of hedge funds, data processing runs through the entire lifecycle of a trade: data is taken from external sources such as exchanges and brokers, modified and adjusted in execution, and captured in the form of snapshots to meet the reporting demands of regulators and institutional investors.

Partly because of the constantly changing demands for transparency and compliance, smarter data management can be expensive and time-consuming. However, this is now essential to good fund management, and taking advantage of the latest technologies and staying abreast of changes to the data ecosystem are things that no hedge fund can afford to ignore. And once the new data ecosystem has been embraced, the opportunities it presents to hedge fund managers more than justify the outlay.

A holistic approach

In the past, data management was separated into front, middle, and back office functions. However, in the new environment, funds are taking a more holistic approach to data inflows and outflows, streamlining compliance issues and enhancing the information set that surrounds their positions.

Rather than treating their operations as a one-way pipeline, funds are starting to see them as an ecosystem, in which cross-office data functions converge and run near-simultaneously instead of following the linear progression of the traditional trade lifecycle.

Risk is becoming a front-office issue, portfolio management is the same as it ever was, and compliance is everywhere. In the past, traders had to get their risk officer to sign off on something, and then spend hours updating systems and informing colleagues and, more recently, investors of the impact it would have on performance and risk. Now that the data map has changed, it’s time for a new hedge fund model.

A new model for data

The new operational model employed by the hedge fund manager of today is a redefinition of the concept of ‘platform’, using the cloud and a single set of accounts to enable important functions to happen in real time. In the current data-management age, where cross-office functionality is a reality, the operational models employed are required to house, curate, and level-off information sets as they occur.

In addition to managing ever-larger amounts of market data, funds also have to take care of performance reporting, risk projections, disaster planning, and partitioned client data. In order to do this, funds need to employ a model that supports automation where appropriate.

In the new hedge fund reality, real-time, continuous actions are the new normal, with funds being expected to understand, identify, and take advantage of opportunities as they occur. From a data standpoint, real-time is only a point on a larger continuum of activity that occurs when a participant observes or captures a single event in time.

By contrast, continuous processing is the underlying current that accepts and captures, or rejects, data inflows and outflows. With more and more pressure being applied by regulators and investors, managers need to be able to rely on continuous, automated services, processes, and technology to support their business.
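As a rough illustration of the accept-and-capture-or-reject idea, the sketch below runs each incoming record through a simple check and files it as accepted or rejected. The field names, the validation rules, and the `validate` and `process` functions are all hypothetical, chosen only for this example; a real fund's checks would be far more extensive.

```python
from typing import Iterable

# Hypothetical minimum set of fields a record must carry to be captured.
REQUIRED_FIELDS = ("security_id", "quantity", "price")


def validate(record: dict) -> list:
    """Return a list of problems found in a record (empty if it is acceptable)."""
    problems = [f"missing {field}" for field in REQUIRED_FIELDS if field not in record]
    if "price" in record and record["price"] <= 0:
        problems.append("non-positive price")
    return problems


def process(stream: Iterable[dict], accepted: list, rejected: list) -> None:
    """Consume records one at a time, capturing good ones and logging rejects."""
    for record in stream:
        problems = validate(record)
        if problems:
            # Keep the record together with the reasons, so it can be reviewed.
            rejected.append((record, problems))
        else:
            accepted.append(record)
```

Because `process` accepts any iterable, the same loop could in principle be fed from a file, a queue, or a live feed without changing the checks.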

With the increase in the amount of data has come a corresponding rise in the number of different sources and types of data. This means that systems that were previously only confronted with one or two sources of data at a time now have to deal with a much more complex interchange of information from various sources including counterparties, exchanges, fund administrators, and prime brokers.

There is, however, a process that lets these data feeds be handled consistently regardless of origin, and this is called normalisation: converting incoming data into a common format. This allows for more accurate pricing and valuations for portfolio managers to use when evaluating trades, as well as providing better analysis and reporting to investors and regulators.
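To make normalisation concrete, here is a minimal sketch that maps records from two differently shaped feeds onto one common structure. Everything in it is an assumption made for illustration: the `Position` record, the two feed layouts (a prime broker feed and a fund administrator feed), and their field names do not come from any real provider.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Position:
    """Hypothetical common format that every feed is converted into."""
    source: str
    security_id: str
    quantity: float
    price: float
    as_of: date


def from_prime_broker(row: dict) -> Position:
    # Assumed layout: ticker, quantity and price as strings, ISO-format date.
    return Position(
        source="prime_broker",
        security_id=row["ticker"].strip().upper(),
        quantity=float(row["qty"]),
        price=float(row["px"]),
        as_of=date.fromisoformat(row["date"]),
    )


def from_fund_admin(row: dict) -> Position:
    # Assumed layout: symbol, units, price in cents, date as DD/MM/YYYY.
    day, month, year = row["valuation_date"].split("/")
    return Position(
        source="fund_admin",
        security_id=row["symbol"].strip().upper(),
        quantity=float(row["units"]),
        price=row["price_cents"] / 100,
        as_of=date(int(year), int(month), int(day)),
    )


# One converter per source; supporting a new feed means adding one entry here.
NORMALISERS = {
    "prime_broker": from_prime_broker,
    "fund_admin": from_fund_admin,
}


def normalise(source: str, row: dict) -> Position:
    """Convert a raw record from a named source into the common format."""
    return NORMALISERS[source](row)
```

Once both feeds arrive as `Position` records, the same security, price, and date can be compared directly across sources, which is what makes downstream pricing and reporting more consistent.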

Another key requirement of the new model is the need to investigate and utilize historical, security-level data that is unique to the fund. Having all the information since the fund’s inception gives managers much more insight into the efficacy of their strategies, and helps to inform the creation and implementation of strategy going forward.

Although data trafficking, shaping, and viewing are relatively benign activities, data management really comes into its own with regard to uncovering, and recovering from, adverse events and protecting investor interests. Keeping each client’s data partitioned behind a firewall, so that it is not co-mingled with other clients’ data, can prevent major security problems from occurring. As a general rule, cloud technology is better for this, as not only does it provide secure and convenient access, but it also provides a back-up should anything happen to the primary data store, such as a natural disaster or a power outage.

Data management – a differentiator

Going forward, those funds that can boast solid, modern infrastructures will be in a much better position to leverage the potential of big data and protect themselves from unforeseen events. All of this can be done using smarter automated services – both maintaining precision and valuations and nurturing ongoing processes – which can be accessed in real time from anywhere in the world.

At the moment, many of the market solutions available do not incorporate modern capabilities such as cloud technology and multi-tenant architecture, relying instead on outdated legacy systems and separate databases. With this type of technological architecture, funds will struggle to meet regulatory requirements and to provide their investors with the type of information that they require, and they may miss opportunities that better-equipped funds are in a position to exploit.

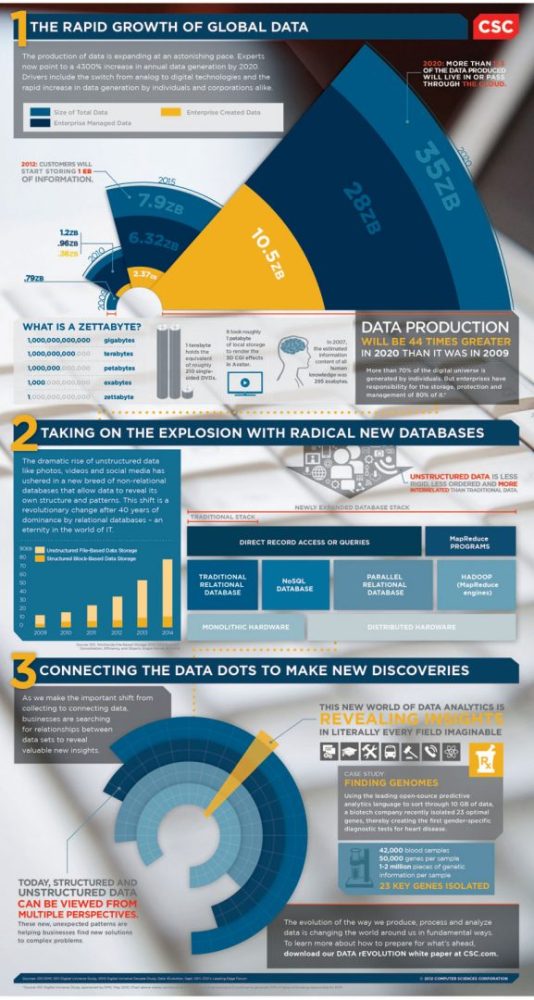

To illustrate some of the points made in this article, here is an infographic from CSC that demonstrates how much big data has redrawn the map for businesses.

I am a writer based in London, specialising in finance, trading, investment, and forex. Aside from the articles and content I write for IntelligentHQ, I also write for euroinvestor.com, and I have written educational trading and investment guides for various websites including tradingquarter.com. Before specialising in finance, I worked as a writer for various digital marketing firms, specialising in online SEO-friendly content. I grew up in Aberdeen, Scotland, I have an MA in English Literature from the University of Glasgow, and I am a lead musician in a band. You can find me on Twitter @pmilne100.